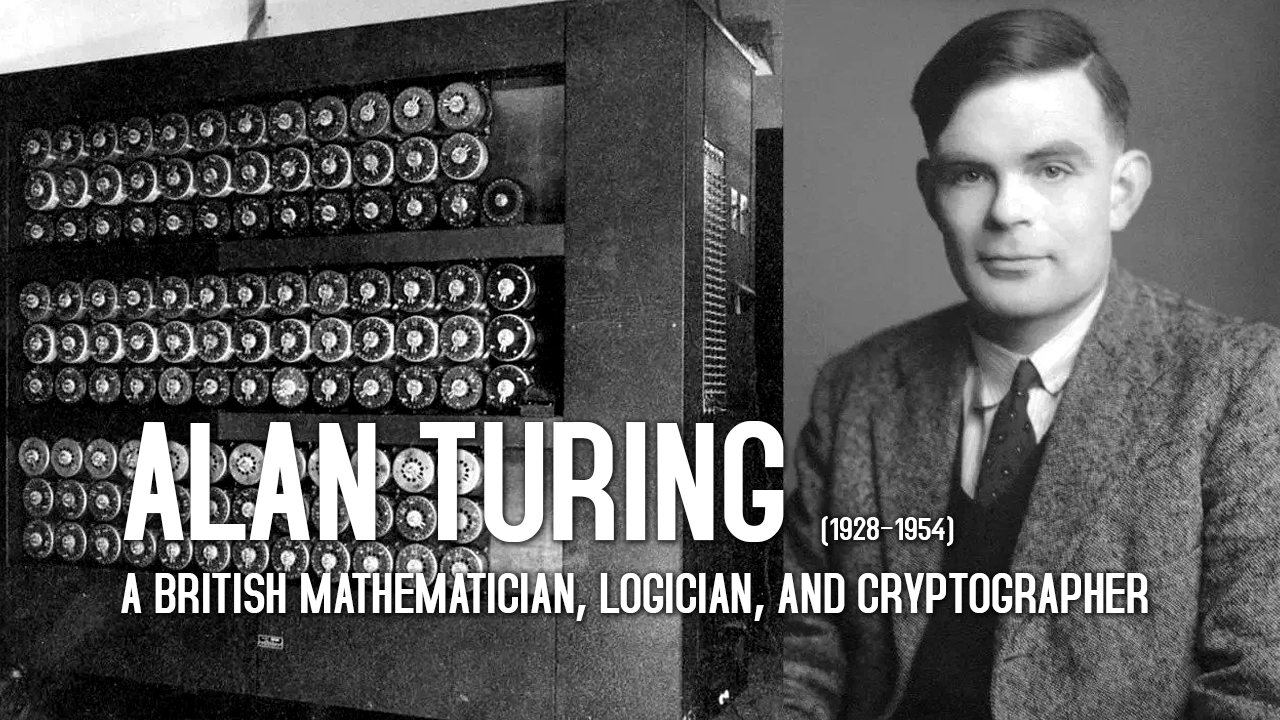

A British mathematician, logician, and cryptographer

Alan Turing (1912–1954), a British mathematician, logician, and cryptographer, stands as a foundational figure in the development of modern computing and artificial intelligence (AI). Born in London, Turing showed an early interest in mathematics and science, much of it self-taught. His seminal work on formal systems and computation laid the groundwork for disciplines that continue to shape technology today. Turing’s most celebrated contributions include the conceptualization of the Turing machine, a theoretical model of computation, and his pivotal role in breaking Nazi encryption during World War II. As a codebreaker at Bletchley Park, he designed the British Bombe, an electromechanical machine that built on earlier Polish work and greatly shortened the time needed to recover the daily settings of the Enigma cipher used by German forces. His work during this period, which remained classified for decades, exemplifies the intersection of mathematics, engineering, and wartime strategy.

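Beyond his wartime achievements, Turing’s legacy extends into the realm of theoretical computer science. He formalized the notion of an algorithm as a procedure that a simple machine could carry out step by step, and proved that some well-defined problems cannot be solved by any such mechanical procedure. In particular, he showed that Hilbert’s Entscheidungsproblem (the “decision problem” of whether a general method exists for deciding if any given statement of first-order logic is provable) has no solution. This work, published in his 1936 paper “On Computable Numbers, with an Application to the Entscheidungsproblem,” laid the groundwork for the field of computability theory. His 1950 paper “Computing Machinery and Intelligence,” in which he introduced what he called the “imitation game” and what is now known as the Turing Test (a criterion for judging whether a machine can exhibit intelligent behavior indistinguishable from a human’s), remains a cornerstone of AI philosophy. Turing’s vision of a “thinking machine” foreshadowed modern neural networks and machine learning: in the same paper he proposed building a “child machine” that would learn through training rather than being fully programmed in advance. The societal and ethical questions raised by today’s AI systems, however, reach well beyond those he addressed.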
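
Turing’s idea of an algorithm as a machine that reads and writes symbols on a tape, one step at a time, can be made concrete with a short simulation. The sketch below is a minimal illustration under stated assumptions, not Turing’s own 1936 formulation: the transition-table layout, the `run_turing_machine` function, and the binary-increment machine `INCREMENT` are all hypothetical examples chosen for brevity. The step limit reflects the undecidability result in a practical way: a simulator can only give up after some bound, because no general procedure can decide in advance whether an arbitrary machine will eventually halt.

```python
from collections import defaultdict

BLANK = "_"  # symbol assumed to fill every unwritten tape cell

# Hypothetical example machine: adds 1 to a binary number on the tape.
# Transition table: (state, symbol read) -> (symbol to write, head move, next state)
INCREMENT = {
    ("carry", "1"): ("0", -1, "carry"),  # 1 + carry = 0, carry moves one cell left
    ("carry", "0"): ("1", 0, "halt"),    # 0 + carry = 1, nothing left to do
    ("carry", BLANK): ("1", 0, "halt"),  # ran past the leftmost digit: new leading 1
}


def run_turing_machine(rules, tape_str, head, state, max_steps=10_000):
    """Simulate a single-tape Turing machine until it enters the 'halt' state.

    The tape is unbounded in both directions: cells never written read as BLANK.
    A missing (state, symbol) rule raises KeyError, i.e. the machine is stuck.
    Returns the non-blank portion of the tape as a string.
    """
    tape = defaultdict(lambda: BLANK, enumerate(tape_str))
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            # We cannot know in general whether the machine would ever halt.
            raise RuntimeError("step limit reached; the machine may never halt")
        write, move, state = rules[(state, tape[head])]
        tape[head] = write
        head += move
        steps += 1
    cells = [i for i, symbol in tape.items() if symbol != BLANK]
    if not cells:
        return ""
    return "".join(tape[i] for i in range(min(cells), max(cells) + 1))


# 1011 (eleven) + 1 = 1100 (twelve); the head starts on the rightmost digit.
print(run_turing_machine(INCREMENT, "1011", head=3, state="carry"))  # → 1100
```

Storing the tape in a `defaultdict` keyed by integer position is one simple way to model a tape that extends without limit in both directions; the negative index reached when the carry runs off the left end is handled the same way as any other cell.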

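Turing’s life was marked by both brilliance and tragedy. In 1952 he was prosecuted for “gross indecency” because of a relationship with another man, as homosexual acts were then criminal offences under British law. After his conviction he accepted hormonal treatment with synthetic oestrogen (so-called chemical castration) as an alternative to imprisonment. The conviction also cost him his security clearance, ending his government cryptographic work, but he continued research in mathematical biology and computing at the University of Manchester until his death in 1954. His prosecution highlights the cost of the era’s enforced social conformity, including its criminalization of homosexuality. Today, Turing is celebrated as a pioneer whose work transcends disciplines, influencing fields from quantum computing to bioinformatics. His name is carried by institutions such as the Alan Turing Institute, the UK’s national institute for data science and artificial intelligence, which advances AI research and work on its ethical use.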

The implications of Turing’s inventions are profound and far-reaching. His conceptualization of the Turing machine revolutionized the understanding of computation and logic, inspiring generations of computer scientists. The principles he outlined, such as the universality of computation (a single “universal” machine can simulate any other Turing machine given a description of it) and the importance of formal systems, underpin modern algorithms and AI architectures. Moreover, the Turing Test, by framing machine intelligence in terms of observable conversational behavior, has become a reference point in broader debates about AI ethics, bias, and the future of employment. As AI systems become increasingly sophisticated, Turing’s insights remain relevant in addressing challenges like algorithmic fairness, data privacy, and the philosophical question of machine autonomy. Ultimately, Turing’s work underscores the transformative power of interdisciplinary thinking and the enduring quest to harness computational power for societal benefit.