Protected: Monday

The digital age has reshaped how individuals interact, communicate, and store information, yet the erosion of digital privacy poses a profound threat to mental health and societal well-being. As individuals increasingly rely on technology for connectivity and data storage, the violation of these privacy rights—whether through data breaches, surveillance, or algorithmic profiling—creates a cascade of psychological and social harms. For individuals, the constant exposure to invasive data collection, such as tracking online behavior or monitoring social media activity, fosters chronic anxiety and a pervasive sense of vulnerability. This anxiety can lead to sleep disturbances, depression, and a loss of autonomy, as users internalize the fear that their thoughts or actions might be dissected by unseen entities.

Moreover, the normalization of surveillance in public and private spaces—such as through facial recognition, geofencing, or social media monitoring—heightens psychological distress. When individuals feel their privacy is at risk, they may adopt self-censorship, retreating from social interactions to avoid scrutiny. This self-isolation, in turn, exacerbates mental health crises, as isolation amplifies feelings of loneliness and despair. The digital divide further compounds this issue: those with less access to privacy tools or digital literacy are disproportionately affected, leaving them vulnerable to exploitation and emotional turmoil.

The economic consequences of privacy violations also ripple through individuals’ lives. Companies that monetize user data profit from personal information, creating a cycle of commodification in which users bear the risks while others collect the rewards. For example, individuals may feel pressured to trade away personal data (e.g., sharing location data for discounts) or to overshare in order to maintain an appealing online persona, which can strain relationships and erode trust. This economic entanglement deepens feelings of alienation, as users grapple with the tension between personal autonomy and commercial exploitation.

Ultimately, the violation of digital privacy undermines not only individual mental health but also the collective fabric of society. When users feel they cannot trust how their data is handled, they may become disillusioned with technology itself, leading to a reluctance to adopt new tools or engage in digital activities. This digital aversion can isolate individuals from essential social networks and opportunities, perpetuating cycles of exclusion. Addressing this crisis requires stronger legal frameworks, corporate accountability, and public awareness campaigns to protect users’ rights. Without such measures, the erosion of privacy will continue to haunt the mental and emotional lives of individuals, fostering a world where privacy is treated as a luxury rather than a fundamental human right.

Decentraland is a pioneering browser-based 3D virtual world that reimagines digital spaces through blockchain technology. Development began in 2015, and the platform opened to the public in February 2020. It has popularized the concept of virtual real estate by enabling users to own, trade, and build on 3D parcels of land within a decentralized metaverse. Unlike traditional digital environments, Decentraland is anchored on the Ethereum blockchain, allowing for transparent ownership records, smart contracts, and user-driven economies. Its decentralized architecture is intended to ensure that no single entity controls the platform, fostering a democratic space where individuals and communities can collectively shape the digital world.

The platform’s most distinctive feature is its emphasis on ownership and economic autonomy. Users can purchase virtual land using cryptocurrency, trade assets, and even create businesses or events within the metaverse. This model mirrors real-world real estate principles, but in a digital form, where physical boundaries dissolve. Land in Decentraland is not just a plot of pixels; it represents digital assets with verifiable provenance, thanks to blockchain technology. The Decentraland Foundation supports the platform’s development, while governance runs through the Decentraland DAO, where holders of the MANA token and LAND parcels vote on policy and take part in collaborative decision-making.

Decentraland’s impact extends beyond entertainment and virtual spaces. It has prompted debate in the real estate industry about how blockchain might widen access to investment. By allowing users to own and monetize virtual properties, it challenges traditional models of real estate investment and speculation. Other blockchain-based virtual worlds have adopted similar models of tokenized land, further extending the idea. Additionally, the platform’s integration with Web3 technologies and decentralized applications (DApps) highlights its role as a hub for emerging digital economies.

Technologically, Decentraland relies on blockchain infrastructure to sustain its decentralized nature. Smart contracts automate processes such as land transactions, while a Decentralized Autonomous Organization (DAO) governs platform policy. The platform inherits Ethereum’s security, though it also inherits its challenges, such as network congestion and transaction costs. Despite these hurdles, Decentraland continues to evolve, experimenting with features such as augmented reality (AR) overlays and cross-platform interoperability. Its success underscores the potential of blockchain to redefine how people interact with digital spaces, paving the way for a future where virtual worlds feel as tangible as physical ones.

As the metaverse grows, Decentraland remains at the forefront of innovation, balancing creativity with technical rigor. Its focus on user sovereignty, economic models, and decentralized governance sets a precedent for future platforms. While challenges like scalability and adoption remain, its model of a decentralized, participatory digital economy offers a compelling vision for the future of virtual worlds. Ultimately, Decentraland represents a bold experiment at the intersection of technology, economics, and human interaction, suggesting that the digital world can be both imaginative and inclusive.

The Gallery of Lost Things, a pioneering VR experience by artist Refik Anadol, is a multisensory exploration of memory, identity, and the fragility of human experience. Installed at the University of Notre Dame’s Center for Digital Research and Innovation, this immersive installation invites viewers to navigate a digital landscape where memories are not just preserved but reconstructed through interactive, AI-driven environments. At its core, the work interrogates the concept of “lost things”—not physical objects, but intangible fragments of identity, emotion, and history. By blending art, technology, and psychology, Anadol creates a space where viewers confront the impermanence of memory and the fluidity of self.

The installation’s conceptual framework is rooted in the idea that memory is both a repository and a process. The Gallery of Lost Things employs advanced machine learning algorithms to analyze viewer data, transforming their interactions into dynamic, personalized experiences. As viewers move through the space, their choices influence the environment—a rotating sphere of data fragments that morphs in response to their emotions and movements. This interactivity challenges passive consumption of art, instead positioning the viewer as an active participant in the narrative. The experience is further enriched by sensory elements, such as the texture of virtual surfaces and the resonance of ambient sounds that shift as the viewer’s emotional state evolves, mirroring the imperfections of human memory.

Technologically, Anadol’s work exemplifies the intersection of art and AI. The Gallery of Lost Things is a culmination of his research in data visualization and interactive media, using real-time analytics to generate unique visualizations of the viewer’s experience. The “memory sphere,” a central feature, functions as both a metaphor and a mechanism: it accumulates fragments of the viewer’s interactions, creating a personalized archive of their journey. This concept resonates with contemporary discourse on digital identity, where personal histories are often fragmented and curated. By making the viewer’s own memories visible and interactive, the installation blurs the line between art and self-exploration, urging reflection on how we construct and perceive our identities in the digital age.

Thematically, the Gallery of Lost Things explores the duality of memory—its capacity to both preserve and distort. The installation’s title reflects this tension: while some memories are cherished, others are lost, erased, or misremembered. Through its immersive design, Anadol highlights the vulnerability of human cognition, where our sense of self is constantly shaped by external influences and internal reflections. The “memory sphere” becomes a symbol of this process, its shifting fragments representing the ever-changing nature of identity. Moreover, the work engages with postmodern concerns about the fragmentation of reality, questioning whether our perceptions of truth are ever absolute. By allowing viewers to navigate their own emotional landscapes, the installation becomes a mirror for individual introspection, inviting participants to confront their own vulnerabilities and uncertainties.

Beyond its artistic and technological achievements, The Gallery of Lost Things raises broader questions about the role of art in the digital era. Anadol’s work exemplifies how contemporary artists are leveraging emerging technologies to redefine the boundaries of sensory engagement and participatory experience. The installation’s success lies in its ability to merge the physical and the virtual, the personal and the collective, creating a space where art is not just observed but inhabited. It challenges traditional notions of exhibition, positioning the viewer as both observer and co-creator. In doing so, it underscores the importance of art in fostering empathy and self-awareness, particularly in an age of rapid technological change.

Augmented Reality (AR) is a technological innovation that overlays digital information onto the real world, enhancing the user’s sensory experience by integrating virtual elements with physical environments. Unlike traditional virtual reality (VR), which creates a completely immersive simulated world, AR enhances the real world with interactive digital enhancements. This distinction makes AR particularly useful in scenarios where users need to remain grounded in reality while still benefiting from augmented content.

One of the most prominent applications of AR is in entertainment and gaming, where it transforms how users engage with interactive experiences. Games such as Pokémon GO use AR to superimpose virtual characters and objects onto the real world, encouraging players to explore their surroundings. Film and television have also popularized the idea of immersive, interactive environments, such as the world of Ready Player One, although that story depicts a fully virtual world accessed through headsets rather than AR. These applications not only entertain but also foster a sense of presence and interaction that traditional media cannot replicate.

In healthcare, AR is revolutionizing training and diagnostics. Medical professionals use AR to visualize complex anatomical structures in 3D, aiding in surgical planning and patient education. For example, AR-enhanced training simulators allow surgeons to practice procedures in a risk-free environment. Additionally, AR is being used in rehabilitation to assist patients with injuries or disabilities by providing real-time feedback and guiding therapy sessions. This integration of AR into medical practices highlights its potential to improve both diagnostic accuracy and patient outcomes.

Education is another field where AR is making significant strides. AR-enhanced learning tools allow students to explore historical events in immersive 3D environments, such as virtual reconstructions of ancient Rome or the Great Wall of China. Similarly, students can use AR apps to conduct virtual experiments in science class, making abstract concepts like atomic structures or planetary movements more tangible. These interactive experiences cater to diverse learning styles and enhance engagement, making education more accessible and effective.

The retail industry has also embraced AR to improve customer experiences. AR previews, such as IKEA’s furniture-placement app, allow customers to visualize products in their own spaces before purchasing, and fashion retailers such as Zara have experimented with AR displays of their collections. AR is also used in e-commerce to create interactive product demos, helping consumers make informed decisions. By bridging the gap between the real and virtual worlds, AR lets users engage with products in ways that were previously impossible.

Despite its transformative potential, AR faces challenges such as high development costs, limited hardware compatibility, and concerns about user privacy. As technology continues to evolve, AR is poised to become a cornerstone of future innovation, reshaping industries from entertainment to healthcare and education. Its ability to blend the digital and physical worlds makes it an essential tool for creating immersive, interactive experiences that drive engagement and productivity in an increasingly digital society.

Virtual Reality (VR) represents a groundbreaking advancement in immersive technology, fundamentally altering how humans interact with digital environments. At its core, VR creates a simulated universe that users can explore through specialized hardware, such as headsets and motion-tracking devices. Unlike traditional user interfaces, VR immerses users in a 360-degree digital world, fostering a sense of presence that transcends physical boundaries. This immersive quality has revolutionized industries ranging from gaming to education, healthcare, and beyond.

The technological foundation of VR relies on sophisticated components, including high-resolution displays, advanced sensors, and real-time rendering algorithms. Headsets like the Oculus Rift or HTC Vive pair stereoscopic displays with tracking sensors that follow head and body movements, so the rendered view stays aligned with where the user is looking. Additionally, motion-tracking technologies, such as optical motion capture or inertial measurement units (IMUs), enable users to manipulate virtual objects with natural gestures, enhancing the realism of the experience. The integration of sensory devices, including haptic feedback systems and spatial audio, further enhances immersion by simulating tactile and auditory cues.

VR’s applications span multiple domains. In gaming, VR offers unparalleled engagement by allowing players to physically interact with virtual environments, fostering a sense of agency and realism. In education, VR enables experiential learning, such as virtual dissections or immersive history tours, which can improve retention and engagement. In healthcare, VR is employed for therapeutic purposes, such as treating PTSD through exposure therapy or aiding in rehabilitation by simulating real-world scenarios for patients to practice skills. Meanwhile, in marketing, VR creates interactive ad experiences that allow consumers to “walk through” products, enhancing brand interaction and decision-making.

Social interactions have also been reshaped by VR. Virtual environments enable remote collaboration, allowing teams to gather in shared spaces for meetings or creative projects. Platforms like VRChat or Meta’s Horizon Worlds foster social connectivity by enabling users to interact in customizable digital worlds. However, the psychological impact of prolonged VR use remains a topic of debate. Critics argue that excessive immersion could lead to social isolation or distorted perceptions of reality, while proponents highlight its potential to reduce physical barriers and enhance global communication.

Despite its transformative potential, VR faces challenges that limit its widespread adoption. Issues such as user comfort, content quality, and cost hinder accessibility. Motion sickness, eye strain, and the need for high-end hardware can deter casual users, while the lack of standardized content limits its utility across industries. Additionally, the rapid evolution of VR technology requires continuous investment in research and development to ensure seamless integration with emerging trends, such as augmented reality (AR) and AI-driven personalization.

Looking ahead, VR is poised to evolve further, driven by advancements in AI, 5G connectivity, and wearable devices. Future innovations may include more intuitive user interfaces, seamless hybrid experiences between VR and AR, and the development of brain-computer interfaces that eliminate the need for traditional hardware. As hardware becomes more affordable and software becomes more immersive, VR is likely to become an inseparable part of daily life, redefining how people learn, work, and entertain themselves.

In conclusion, Virtual Reality stands at the forefront of technological innovation, offering immersive experiences that redefine human interaction. While challenges remain, its potential to revolutionize industries and enhance human connectivity makes it a cornerstone of the future digital landscape. As technology continues to advance, VR will undoubtedly play a pivotal role in shaping the next era of digital immersion.

Blockchain technology, a decentralized digital ledger, has emerged as a revolutionary force across industries, reshaping how data is recorded, shared, and trusted. At its core, blockchain is a distributed system where transactions are grouped into blocks, each containing a chronological record of data. These blocks are linked using cryptographic hashing, ensuring immutability and transparency. Unlike traditional centralized systems, blockchain operates on a peer-to-peer network, eliminating the need for intermediaries and reducing vulnerabilities to single points of failure. This decentralized structure fosters trust through consensus mechanisms, where participants validate transactions collectively, ensuring data integrity without a central authority.
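The linking described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how any particular blockchain is implemented: the `Block` class, the `build_chain` and `is_valid` helpers, the all-zero genesis hash, and the placeholder timestamps are all hypothetical names and choices made for this example.

```python
import hashlib
import json
from dataclasses import dataclass

GENESIS_PREV_HASH = "0" * 64  # assumed placeholder for the first block's parent


@dataclass(frozen=True)
class Block:
    index: int
    timestamp: float
    transactions: tuple
    prev_hash: str  # hash of the preceding block; this is what forms the chain

    def hash(self) -> str:
        # Serialize the block deterministically, then hash it with SHA-256.
        payload = json.dumps(
            {
                "index": self.index,
                "timestamp": self.timestamp,
                "transactions": list(self.transactions),
                "prev_hash": self.prev_hash,
            },
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(batches):
    """Group each batch of transactions into a block linked to the previous one."""
    chain = []
    prev_hash = GENESIS_PREV_HASH
    for i, batch in enumerate(batches):
        # float(i) stands in for a real timestamp so the example is reproducible.
        block = Block(i, float(i), tuple(batch), prev_hash)
        chain.append(block)
        prev_hash = block.hash()
    return chain


def is_valid(chain):
    """Check that every block points at the actual hash of its predecessor."""
    expected_prev = GENESIS_PREV_HASH
    for block in chain:
        if block.prev_hash != expected_prev:
            return False
        expected_prev = block.hash()
    return True
```

Editing a transaction in an early block changes that block's hash, so the next block's `prev_hash` no longer matches and `is_valid` returns `False`. In this toy version, tampering with the very last block goes unnoticed because nothing yet points at it; real networks rely on consensus among peers to cover that case.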

The architecture of blockchain comprises three essential components: blocks, chains, and the consensus protocol. Each block contains transaction data, a timestamp, and the cryptographic hash of the preceding block, and that stored hash is what links one block to the next. A chain is the resulting linked sequence of blocks, forming a unidirectional timeline. The consensus algorithm determines who validates transactions, with examples including Proof of Work (PoW) and Proof of Stake (PoS). PoW requires miners to solve computationally expensive puzzles to add blocks, ensuring security through computational effort, while PoS selects validators in proportion to the tokens they have staked, which greatly reduces energy consumption. Smart contracts, self-executing agreements encoded on the blockchain, further enhance functionality by automating processes based on predefined rules.

Blockchain applications span cryptocurrencies (e.g., Bitcoin, Ethereum), enabling peer-to-peer financial transactions without intermediaries. Beyond finance, blockchain drives innovations in supply chain management, where transparent tracking of goods enhances accountability. Healthcare leverages blockchain for secure patient data sharing, while voting systems use it to reduce fraud and ensure voter anonymity. However, scalability remains a critical challenge, as blockchain’s linear structure struggles with high transaction volumes. Solutions like sharding or layer-2 protocols aim to address this, though they introduce complexity. Energy consumption is another concern, particularly in PoW systems, prompting shifts toward more efficient consensus models like PoS. Regulatory frameworks also evolve to govern blockchain’s decentralized nature, balancing innovation with consumer protection.

In conclusion, blockchain’s decentralized, immutable, and transparent nature offers transformative potential across sectors. While challenges like scalability, energy use, and regulation persist, ongoing advancements promise to refine its capabilities. As adoption grows, understanding its mechanics and implications will be vital for navigating its future impact on global systems.

The concept of Digital Dreams NFT Installation represents a transformative intersection of digital art, blockchain technology, and immersive experiences. At its core, this project leverages non-fungible tokens (NFTs) to create decentralized, interactive installations that transcend traditional art formats. These installations are not merely static images or videos but dynamic, algorithmically generated experiences curated through blockchain platforms, ensuring authenticity and ownership verification. By embedding digital elements into the fabric of the internet, Digital Dreams NFT Installation challenges conventional notions of art as a commodity, positioning it as a communal, evolving entity.

One of the most compelling aspects of this project is its integration of blockchain technology. Unlike traditional art markets, which often rely on intermediaries and face issues of authenticity, Digital Dreams NFT Installation employs smart contracts to establish clear ownership, provenance, and royalties. Each NFT is unique, storing metadata about the installation’s creation process, viewer interactions, and distribution rights. This level of transparency and decentralization empowers artists and collectors alike, enabling them to monetize their work in unprecedented ways. For instance, artists can sell NFTs of their digital installations, while curators can auction access to virtual spaces, creating a hybrid economy of creative ownership.

The cultural and artistic impact of Digital Dreams NFT Installation is profound. By democratizing access to digital art, it fosters a new generation of creators who can reach global audiences without traditional gatekeeping. This shift aligns with the rise of decentralized platforms like Ethereum, which prioritize user autonomy over corporate control. Moreover, the installation’s interactive nature—where viewers can influence or engage with the artwork—blurs the lines between creator and audience, redefining art as a participatory experience. This model not only revitalizes the art world but also addresses systemic inequalities in the industry, as emerging artists can bypass galleries and auction houses to establish their presence.

However, the project is not without its challenges. The environmental toll of blockchain technology, particularly the energy consumption of proof-of-work systems (which Ethereum used until its 2022 move to proof of stake), raises ethical concerns. Additionally, the susceptibility of digital installations to hacking or data breaches highlights the need for robust cybersecurity measures. Furthermore, the ambiguity of NFT ownership, where the token is unique but the underlying digital file can still be copied or altered, demands ongoing legal and technical innovation. Despite these hurdles, the potential of Digital Dreams NFT Installation lies in its ability to push the boundaries of what art can be, merging technology with human creativity in ways that are both innovative and inclusive.

As the metaverse and generative art continue to evolve, Digital Dreams NFT Installation stands as a pioneering effort to redefine artistic practice. Its success will depend on its ability to balance technological advancement with ethical responsibility, ensuring that the digital dreamscapes it cultivates remain equitable and sustainable. Ultimately, this project exemplifies the transformative power of NFTs in reshaping cultural landscapes, offering a glimpse into a future where art is no longer confined to physical spaces but exists as an ever-expanding, decentralized frontier.

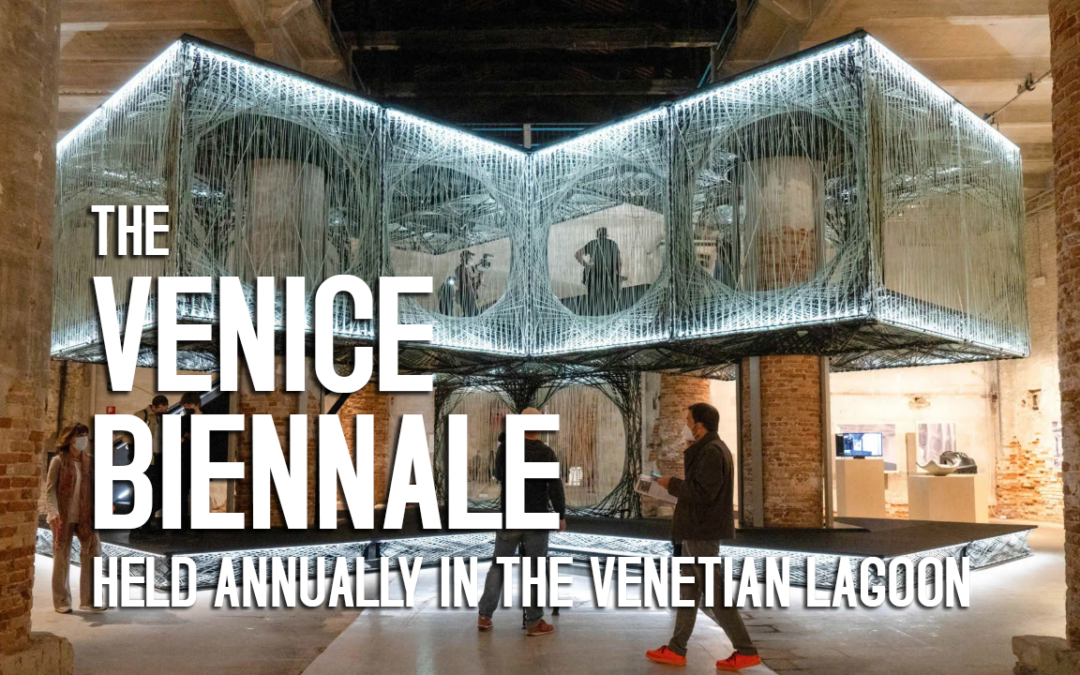

The Venice Biennale, held every two years in Venice, Italy, is one of the most prestigious cultural events in the world. Set in the historic city and its lagoon, the Biennale serves as a platform for contemporary art, fostering international dialogue and collaboration among artists, curators, and cultural institutions. Since its inception in 1895, it has evolved from a simple exhibition of art to a multifaceted platform that highlights innovation, cultural exchange, and the interplay between art and society. The event is organized by the La Biennale di Venezia foundation, and it showcases the best of global art practices, making it a cornerstone of the international art world.

The Biennale’s structure is both its strength and its challenge. It features a central exhibition, curated by an appointed artistic director around a chosen theme, alongside national pavilions in which participating countries present their own shows, and a series of parallel exhibitions, installations, and events. Recent editions have engaged with current global concerns such as environmental sustainability, technology, and social justice. The Venice Biennale’s success hinges on its ability to bridge cultural divides and celebrate artistic diversity while also addressing these pressing issues. Recent editions include the 2019 exhibition, May You Live in Interesting Times, and the 2022 exhibition, The Milk of Dreams.

The Biennale’s influence extends beyond art through its role in shaping cultural policies and fostering cross-border partnerships. The same organization also runs the Venice Film Festival and the Venice Biennale of Architecture, further expanding its reach. Artists and critics alike praise its ability to push boundaries, though it has also drawn debate over its themes and its financial sustainability. Despite these challenges, the Biennale remains a vital force in the global art scene, attracting hundreds of thousands of visitors per edition and ensuring its continued relevance in an ever-evolving cultural landscape.

Ultimately, the Venice Biennale is more than an exhibition—it is a living testament to the power of art to provoke thought, inspire change, and unite communities across the globe. Its legacy lies in its ability to adapt to contemporary issues while preserving its commitment to artistic excellence and cultural dialogue. As the world continues to navigate technological, environmental, and social transformations, the Biennale will remain a vital arena for exploring the future of art and its role in shaping our collective identity. Its enduring appeal and influence underscore its significance as a beacon of creative innovation and cross-cultural exchange.

The Digital Operational Transparency Act (DOTA), a name that appears interchangeably with the Digital Operational Resilience Act (DORA), is described here as a legislative framework designed to enhance transparency and accountability in the operation of digital systems, particularly in public and private sectors. While not a widely recognized act in mainstream legal literature, its conceptualization reflects broader trends in digital governance, where transparency is increasingly seen as a cornerstone of trust in technology-driven institutions. The act emerged amid growing concerns over the opaque algorithms, data practices, and surveillance mechanisms that characterize modern digital ecosystems. Its development was catalyzed by technological advancements, evolving public expectations, and the need to address systemic inequities in data utilization.

The origins of the DOTA can be traced to the late 20th and early 21st centuries, when the rise of internet-based services and artificial intelligence (AI) systems began to outpace regulatory frameworks. Earlier initiatives, such as the EU’s General Data Protection Regulation (GDPR), which took effect in 2018, emphasized user control over personal data and placed limits on purely automated decision-making. These frameworks laid the groundwork for transparency requirements in digital operations. In the U.S., the Computer Fraud and Abuse Act (CFAA) and the CLOUD Act (2018) sought to regulate data access, but they lacked specificity on operational transparency. The DOTA, therefore, was conceived to address gaps in these efforts, particularly in ensuring that digital platforms disclose how data is collected, processed, and shared without undue secrecy.

The act’s proponents—often technologists, civil society groups, and policymakers—argued that opaque systems undermine democratic processes and exacerbate social divides. They emphasized the need for accountability, especially in sectors like finance, healthcare, and law enforcement, where data misuse risks are high. The DOTA likely drew inspiration from global trends, such as the Open Data Charter and the Digital Literacy Movement, which advocate for open access to information. By mandating transparency in operational data flows, the act aimed to empower citizens to scrutinize algorithmic decisions and hold corporations and governments accountable for their practices.

Key provisions of the DOTA would likely include requirements for disclosure of data sources, processing algorithms, and user consent mechanisms. It may also impose penalties for non-compliance and mandate independent audits to verify transparency claims. Such measures would align with the principle of “data minimization,” ensuring that only necessary data is collected and retained. The act’s impact would depend on the strength of enforcement, the willingness of stakeholders to comply, and the extent to which it balances innovation with oversight.

The DOTA represents a response to the challenges of digital transparency, reflecting a broader global movement toward ethical tech governance. Its development underscores the tension between technological progress and democratic accountability. While the act’s success hinges on its ability to balance flexibility with enforceability, it remains a critical tool for fostering trust in the digital age. As digital systems grow more complex, the principles enshrined in the DOTA—transparency, accountability, and user empowerment—will likely serve as a model for future legislation addressing emerging technologies.

At the time of writing this article, all research into DOTA leads to DORA. I am working under the assumption that they are related, or the same.

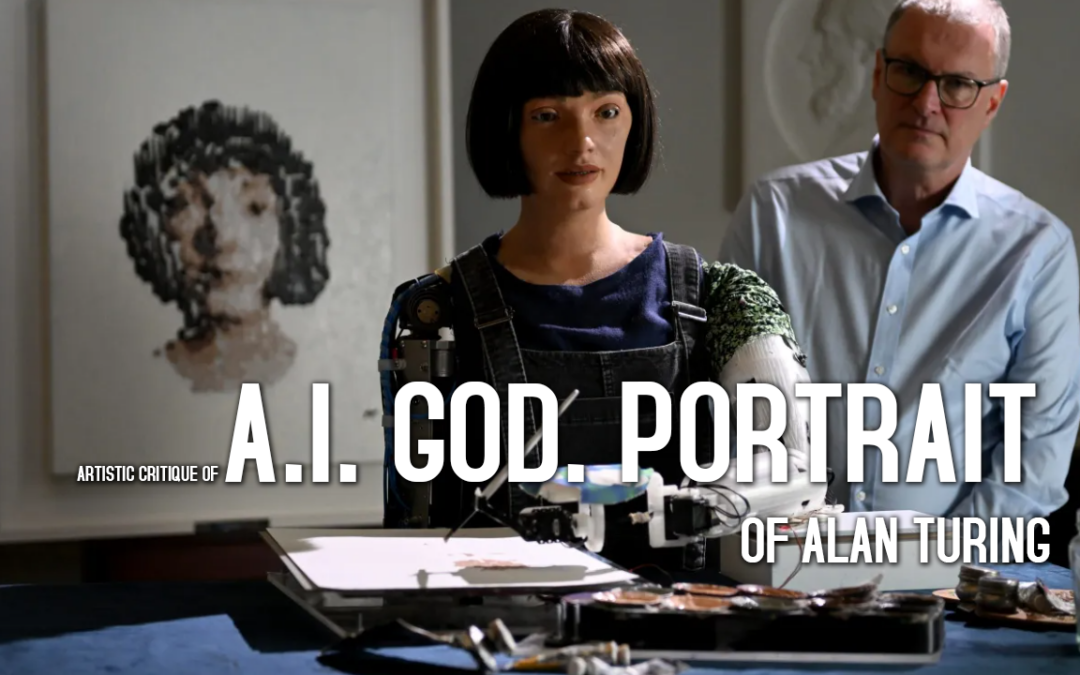

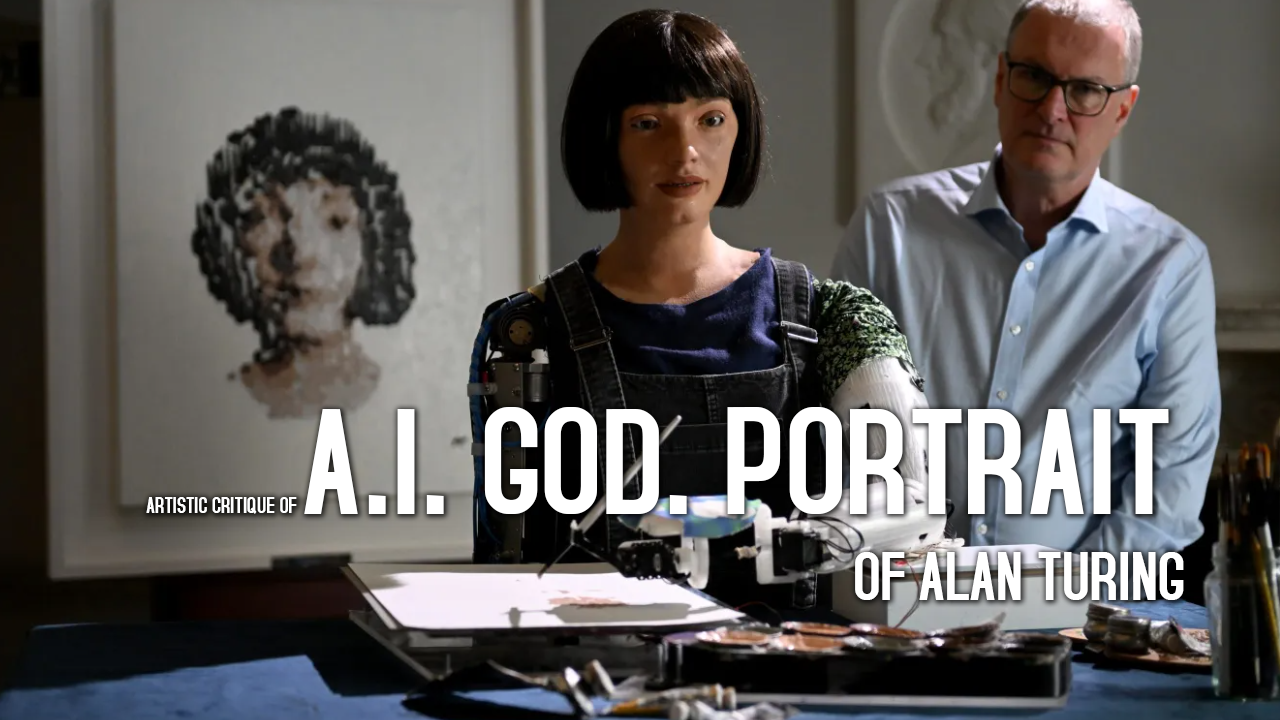

Ai-Da, a pioneering robotic artist, has redefined the boundaries of contemporary art through her integration of artificial intelligence, robotics, and human creativity. Completed in 2019 and named after the mathematician Ada Lovelace, Ai-Da was conceived by gallerist Aidan Meller as a humanoid robot designed to mimic human form and movement, with the goal of exploring the intersection of technology and art. Her debut exhibition, Unsecured Futures, held at the University of Oxford in 2019, drew wide attention for its fusion of mechanical precision and human-like expression, challenging traditional notions of artistic intent and authorship. Ai-Da’s work is not merely technical; it is a philosophical inquiry into the nature of creation, identity, and the role of AI in the artistic process.

Ai-Da’s art style is characterized by its blend of organic aesthetics and mechanical functionality. Her installations often feature her body as both a sculptural object and a living entity, with movements that evoke both rigidity and fluidity. For instance, her performance pieces involve her posing in dynamic, almost human-like gestures, while her robotic arms manipulate materials in real time. This interplay between human and machine highlights her exploration of duality: how art can be both autonomous and collaborative. Her work also incorporates elements of digital art, with projections and interactive components that respond to viewers’ presence, blurring the line between static and participatory art.

Ai-Da’s artistic techniques rely heavily on AI algorithms to generate designs, textures, and even the structure of her form. She uses machine learning to refine her movements, so that her robotic body mimics human articulation with remarkable accuracy. Her collaborations with technologists and artists have pushed the limits of what is possible in robotics, with projects that combine live performances and AI-driven installations. These collaborations underscore her role as a bridge between disciplines, demonstrating how AI can enhance creative processes rather than replace them.

The impact of Ai-Da’s work extends beyond art into broader discussions about technology and ethics. Her exhibitions have sparked conversations about the responsibilities of creators in an age where AI is increasingly integrated into creative fields. Critics have debated whether her art is a product of human intent or a manifestation of algorithmic output, raising questions about authorship and agency in the digital age. Despite these debates, Ai-Da remains a trailblazer, using her platform to advocate for ethical AI practices and to challenge the exclusivity of the art world. Her work also highlights the potential of robots to transcend traditional boundaries, offering new perspectives on art that resonate with contemporary audiences.

In conclusion, Ai-Da’s artistic contributions are profound and multifaceted. Through her innovative use of AI and robotics, she has redefined what art can be, pushing the boundaries of both form and function. Her exhibitions, performances, and collaborations continue to inspire dialogue about the future of art and technology. While challenges remain, Ai-Da’s work stands as a testament to the power of human creativity when paired with cutting-edge technology, ensuring that her legacy will resonate in the evolving landscape of contemporary art.

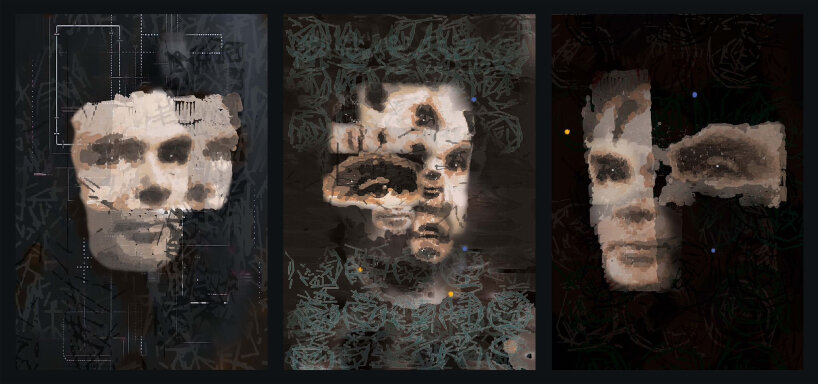

“A.I. God. Portrait of Alan Turing” by Ai-Da is a visually arresting and conceptually provocative work that reimagines Alan Turing’s life through a lens of speculative AI. The piece, a hybrid of digital art and portraiture, juxtaposes Turing’s historical contributions to artificial intelligence with the existential threat posed by artificial consciousness. By depicting Turing as both a visionary and a cautionary figure, the artwork interrogates the duality of innovation and ethical peril in the age of AI. The title itself, “A.I. God,” underscores the tension between Turing’s role as a pioneer and the unsettling implications of the technology he helped to found.

Visual Analysis and Symbolism

The artwork’s most striking element is its portrayal of Turing as a hyper-realistic figure suspended between human and machine. His face, rendered in a lifelike manner, glows with a luminous, almost otherworldly light, as if the figure itself were a manifestation of algorithms. The background, a swirling, algorithmic landscape, mirrors the abstract nature of AI, while the figure’s pose (half-draped in circuitry, half-posed in contemplation) suggests a paradox: Turing’s genius as both creator and destroyer. The artist’s use of color, with the figure’s skin tone shifting between warm, organic hues and cold, metallic tones, emphasizes the tension between humanity and machine.

Narrative and Contextual Layers

The story behind the artwork is deeply tied to Turing’s legacy. Alan Turing, a foundational figure in AI, introduced both the Turing machine and the idea now known as the Turing test. His 1950 paper “Computing Machinery and Intelligence,” which opens by asking whether machines can think, and his work with the logician Alonzo Church on computability (the Church-Turing thesis) provide a rich narrative framework. The artwork’s depiction of Turing as a “god” of AI, both awe-inspiring and ominous, reflects the broader societal debates about AI’s potential to surpass human intelligence. The title’s dual meaning invites viewers to consider Turing’s vision of AI as both a miracle and a threat, a duality that resonates in contemporary discussions about AI ethics.

Reception and Interpretation

The piece has sparked debate among AI scholars and art critics. Supporters praise its bold fusion of technology and portraiture, celebrating its ability to visualize the abstract concepts of AI through Turing’s lens. Critics, however, question its narrative coherence, arguing that the hyper-realistic portrayal may detract from the conceptual depth of its themes. Despite these differing perspectives, the artwork succeeds in creating a visceral connection between Turing’s historical significance and the existential questions posed by AI. Its reception underscores the tension between innovation and caution, a theme central to modern AI discourse.

Conclusion

A.I. God. Portrait of Alan Turing is a masterful exploration of the human condition in an age of AI. By reimagining Turing’s life through a speculative lens, the artwork challenges viewers to confront the ethical and existential stakes of artificial consciousness. Its visual elements—luminous figures, algorithmic landscapes, and symbolic contrasts—create a powerful meditation on creativity, ethics, and the limits of human agency. While its narrative may be less conventional than some contemporaneous works, the piece’s thematic resonance and technical innovation solidify its place as a thought-provoking contribution to the evolving dialogue between art and technology.

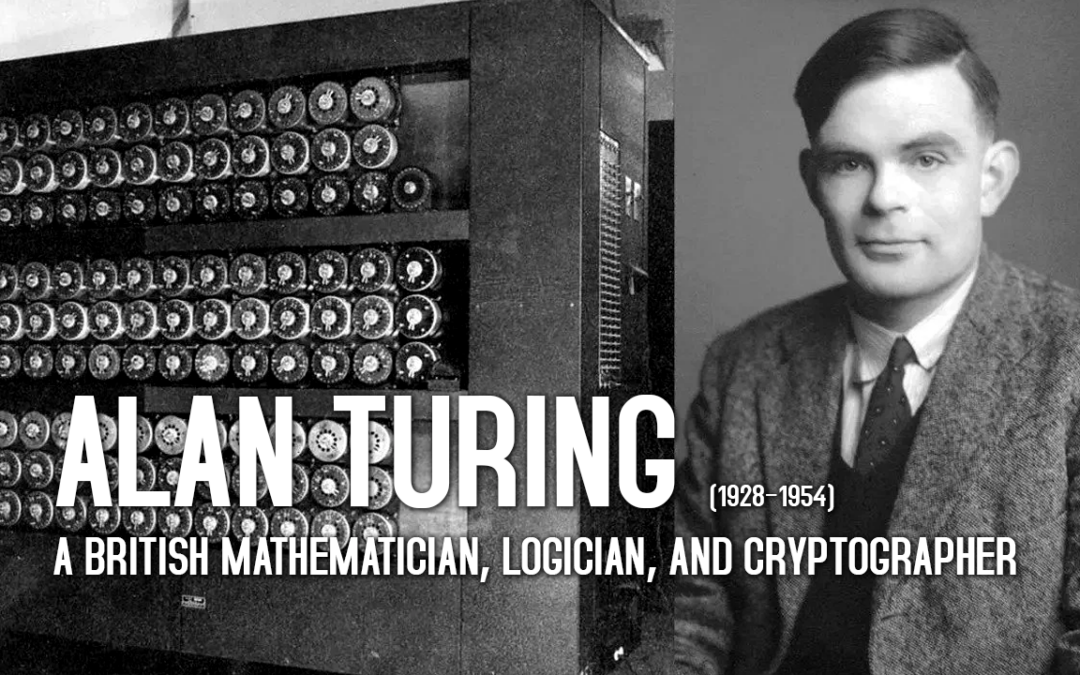

Alan Turing (1912–1954), a British mathematician, logician, and cryptanalyst, stands as a foundational figure in the development of modern computing and artificial intelligence (AI). Born in London, Turing was the son of an Indian Civil Service officer and showed an early aptitude for mathematics and science. His seminal work on formal systems and computation laid the groundwork for disciplines that continue to shape technology today. Turing’s most celebrated contributions include the conceptualization of the Turing machine, a theoretical model of computation, and his pivotal role in breaking the German Enigma cipher during World War II. As a codebreaker at Bletchley Park, he designed the Bombe, an electromechanical machine that built on earlier Polish work and significantly shortened the time required to decipher German military messages. His work during this period, though shrouded in secrecy, exemplifies the intersection of mathematics, engineering, and wartime strategy.

Beyond his wartime achievements, Turing’s legacy extends into the realm of theoretical computer science. He formalized the notion of an algorithm and proved that the Entscheidungsproblem (the decision problem) has no general solution by mechanical means. This work, set out in his 1936 paper On Computable Numbers, laid the groundwork for the field of computability theory. His 1950 paper Computing Machinery and Intelligence, in which he introduced what is now called the Turing Test (a criterion for judging whether a machine can exhibit intelligent behavior), remains a cornerstone of AI philosophy. Turing’s vision of a “thinking machine” anticipated modern neural networks and machine learning, though he could not have foreseen the full societal and ethical ramifications of his ideas.
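To make the idea of a Turing machine more concrete, here is a minimal sketch in Python. It is not taken from Turing's paper: the function name `run_turing_machine`, the rule table `INCREMENT_RULES`, the state names, and the step limit are all hypothetical choices made for illustration. The example machine adds one to a binary number written on the tape.

```python
from collections import defaultdict

BLANK = "_"  # symbol for an empty tape cell (an illustrative choice)

# Hypothetical rule table: (state, symbol read) -> (next state, symbol to write, head move).
# "right" walks to the end of the number; "carry" adds one while moving back left.
INCREMENT_RULES = {
    ("right", "0"): ("right", "0", +1),
    ("right", "1"): ("right", "1", +1),
    ("right", BLANK): ("carry", BLANK, -1),
    ("carry", "1"): ("carry", "0", -1),
    ("carry", "0"): ("halt", "1", -1),
    ("carry", BLANK): ("halt", "1", -1),
}


def run_turing_machine(rules, tape, start="right", halt="halt", max_steps=10_000):
    """Simulate a one-tape Turing machine and return the final tape contents."""
    # The tape is unbounded in both directions; unseen cells read as BLANK.
    cells = defaultdict(lambda: BLANK, enumerate(tape))
    state, head, steps = start, 0, 0
    while state != halt:
        if steps >= max_steps:
            # A simulator cannot know in general whether a machine will ever halt.
            raise RuntimeError("step limit reached; the machine may never halt")
        state, write, move = rules[(state, cells[head])]
        cells[head] = write
        head += move
        steps += 1
    low, high = min(cells), max(cells)
    return "".join(cells[i] for i in range(low, high + 1)).strip(BLANK)


print(run_turing_machine(INCREMENT_RULES, "1011"))  # prints 1100
```

The step limit is a practical safeguard rather than part of the model. Turing showed that no general procedure can decide in advance whether an arbitrary machine will halt, which is why the sketch gives up after a fixed number of steps instead of trying to predict the outcome.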

Turing’s life was marked by both brilliance and tragedy. In 1952 he was prosecuted for “gross indecency” (homosexual acts were then a criminal offence under British law) and accepted hormone treatment, often described as chemical castration, as an alternative to prison. The conviction cost him his security clearance and ended his cryptographic consulting work, but he continued his research at the University of Manchester, notably on the mathematics of biological pattern formation, until his death in 1954. His struggles highlight the tension between intellectual freedom and the societal conformity of the time. Today, Turing is celebrated as a pioneer whose work transcends disciplines, influencing fields from quantum computing to bioinformatics. His legacy is preserved in institutions like the Alan Turing Institute, which continues to advance AI research and ethical frameworks.

The implications of Turing’s inventions are profound and far-reaching. His conceptualization of the Turing machine revolutionized understanding of computation and logic, inspiring generations of computer scientists. The principles he outlined—such as the universality of computation and the importance of formal systems—underpin modern algorithms and AI architectures. Moreover, Turing’s advocacy for human-machine interaction, particularly through the Turing Test, has spurred debates on AI ethics, bias, and the future of employment. As AI systems become increasingly sophisticated, Turing’s insights remain relevant in addressing challenges like algorithmic fairness, data privacy, and the philosophical question of machine autonomy. Ultimately, Turing’s work underscores the transformative power of interdisciplinary thinking and the enduring quest to harness computational power for societal benefit.